Have you tried ChatGPT yet? No? Then it's time. You can have a pleasant (written) conversation with the AI bot, and the exchange can seem quite sophisticated.

The AI is also a capable writer: you can ask it questions and have it generate texts. But anyone who believes that this AI can take on homework, theses or research and deliver perfectly usable results is on very thin ice!

ChatGPT keeps making up nonsense!

The journalist Barbara Wimmer recently used her Facebook account to draw attention to a problem with this artificial intelligence: the bot sometimes simply makes things up, even producing statements with no basis at all. Wimmer noticed this when she talked to the chatbot about drum and bass artists.

At first the bot answered her correctly, but according to Wimmer, as the conversation went on it started talking complete nonsense and at times even invented names or song titles. Wimmer has backed up her statements with screenshots and urges people not to believe everything ChatGPT says. She stresses that users need to recognize the program's limits and should not use the AI to look up facts.

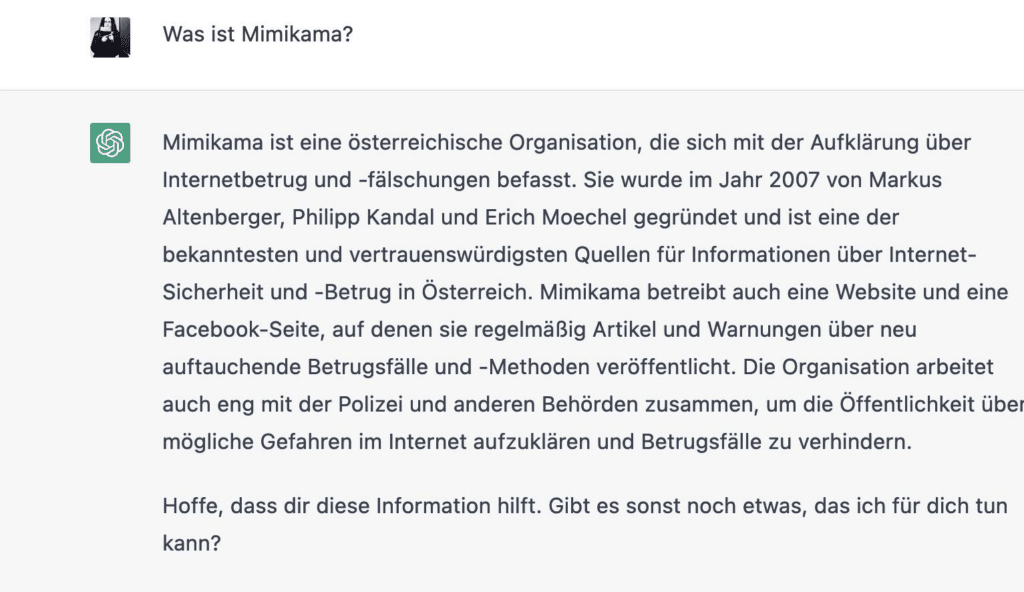

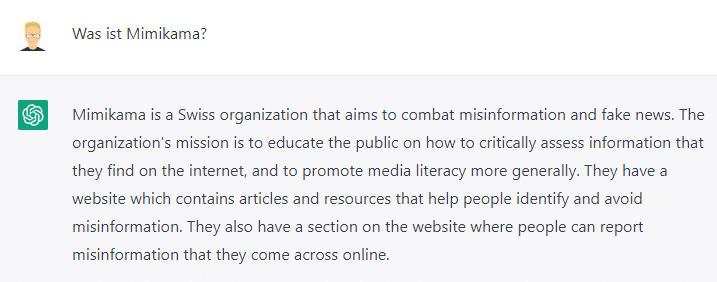

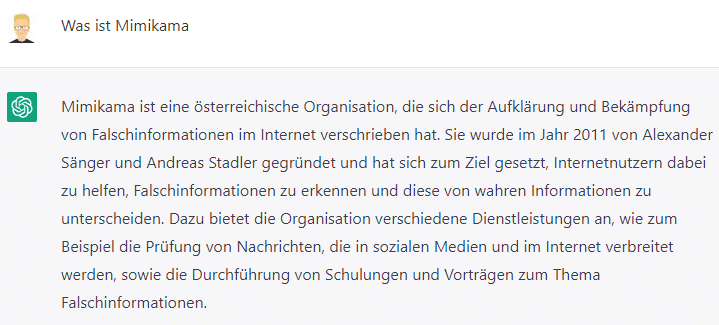

The self-test: Mimikama

In another test, Barbara Wimmer and we both asked the bot for information about Mimikama. The results, which differed every time, were sobering and sometimes had absolutely nothing to do with reality. The bot gave completely false information about the founding of the association and wrongly named certain people as being responsible for it.

Another query also produced false information: this time the bot claimed that Mimikama was a Swiss organization.

It is possible to give ChatGPT hints and feedback on specific statements directly in the chat. The bot politely thanks you, but applies the corrections only partially or not at all. Unfortunately, when the query is repeated some time later, the bot's answers still contain errors. It remains to be seen how this feedback will be processed (and checked by the AI) and how long it will take for corrections to take effect.

AI self-disclosure

The bot's answers are not always easy to understand, either. One example is its self-description, i.e. its answer to the question “What is ChatGPT?”. The bot replies:

ChatGPT is a generative, pre-trained Transformer language model for conversational applications. It can generate natural-language text similar to text written by humans and can be fine-tuned for specific tasks based on large amounts of text data.

It is a machine learning model that uses deep learning techniques to generate natural-language text similar to text written by humans. Designed specifically for conversational applications such as chatbots, ChatGPT can be fine-tuned to generate appropriate responses to user input in a specific area, such as customer service or product recommendations.

The core technology of GPT-based models like ChatGPT is called the Transformer, an architecture commonly used in natural language processing. The Transformer architecture is particularly well suited to handling sequential data such as text, and has proven very effective at generating human-like text.

On this point, at least, I am willing to believe the bot when it answers the question “What is ChatGPT?” about itself.

Conclusion on ChatGPT

A great AI with a very entertaining chatbot. But like Barbara Wimmer, we can only advise against using ChatGPT as a research tool or knowledge database, at least in areas you know nothing about. Feel free to enjoy the bot and what it can do. Use it as a tool, but please check any factual information it gives about specific subject areas, because the AI, even though it keeps learning, often prefers to make things up rather than stay silent.


Notes:

1) This content reflects the current state of affairs at the time of publication. The reproduction of individual images, screenshots, embeds or video sequences serves to discuss the topic.

2) Individual contributions were created with the help of machine assistance and were carefully checked by the Mimikama editorial team before publication.