Computer scientists at the University at Buffalo have developed a tool that exposes fake portraits.

It is remarkable how one piece of software can reveal what another has faked, in this case portraits, or "deepfakes". According to researchers at the University at Buffalo, such fakes can be recognized by the reflections in the eyes, as the software they developed now demonstrates.

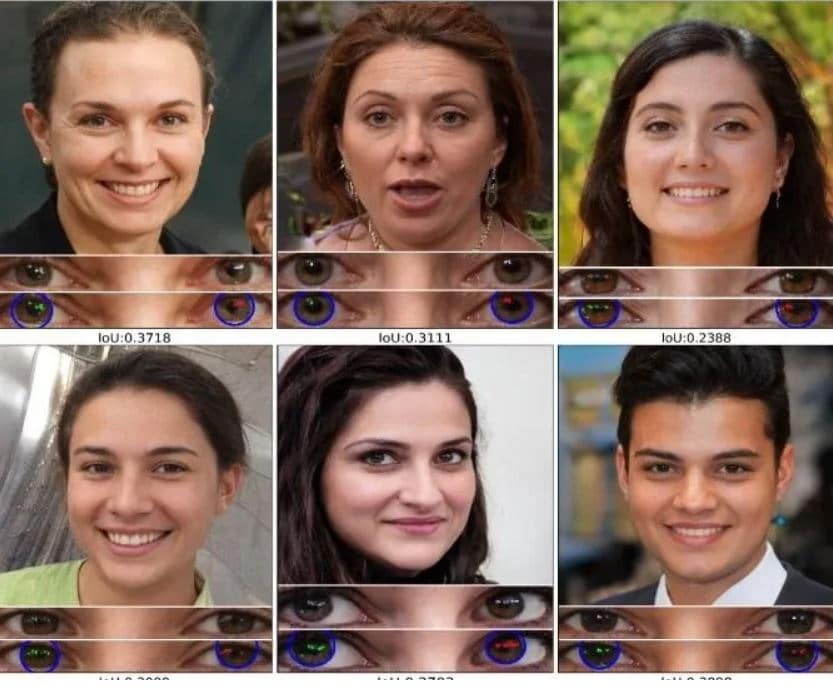

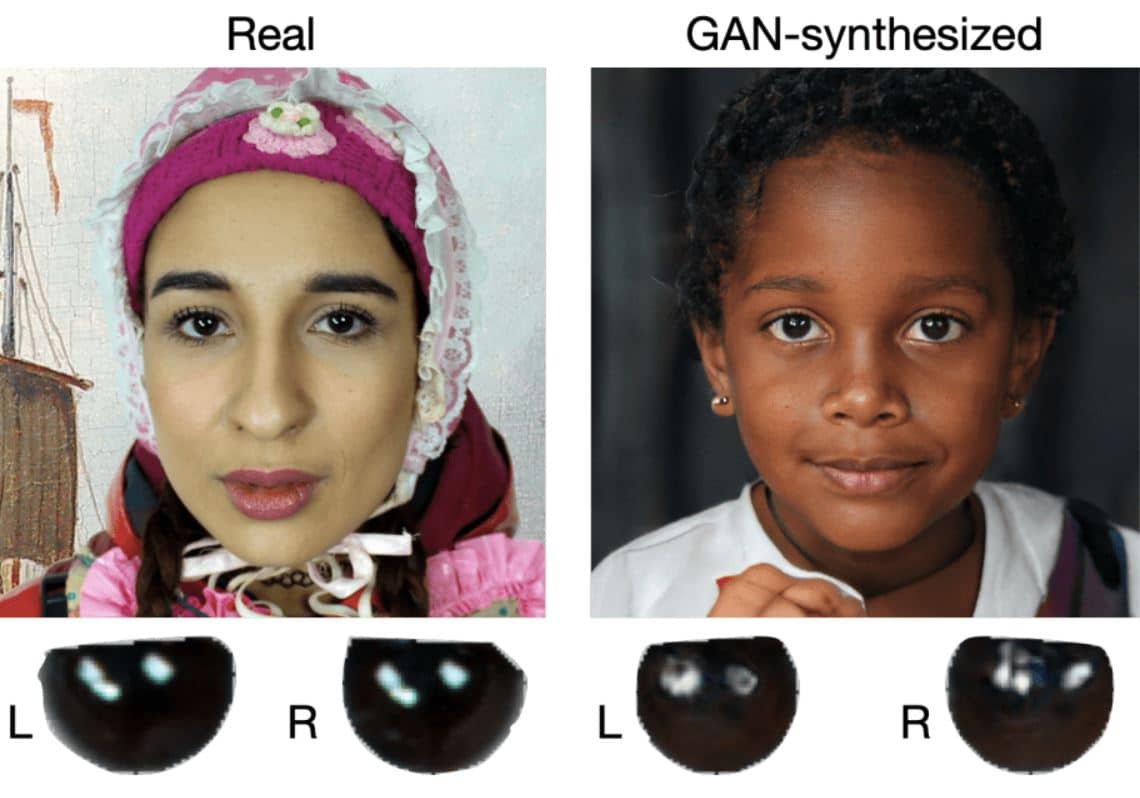

What a person sees is reflected almost identically in both eyes, at least in terms of color and shape. Researchers at the University at Buffalo are using this fact to develop automatic detection of fake portraits, in which the reflections in the two eyes generally differ. The tool proved to be reliable in 94 percent of all tests.

Different patterns

“The cornea is an almost perfect hemisphere and a good reflector. Very similar reflection patterns should appear in both eyes because they see the same thing. We usually don’t notice this when we look at a portrait,” says Siwei Lyu, professor at the Department of Computer Science and Engineering.

However, the image evaluation software that has now been designed does notice differences.

The eyes in most fake images created using artificial intelligence show different patterns, a sure sign that they are fake. According to Lyu, this happens, for example, when a portrait is composed of several photos. He calls his software “Deepfake-O-Meter”.

When comparing the reflections in the eyes, fake portraits reveal irregularities that no one would notice just by looking at the photo.

Videos also work

In their experiments, the team worked with real images from Flickr Faces-HQ and fake images from http://thispersondoesnotexist.com, which show lifelike faces but were created with the help of artificial intelligence. The software analyzes the reflections in the eyes with high precision and compares their shapes and colors for differences.
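To make the idea of comparing the two reflections concrete, here is a minimal sketch in Python. It is not the researchers' implementation: the article does not say which measure the tool uses, so the overlap score (intersection over union of the bright highlight pixels), the brightness threshold of 200 and the function names `highlight_mask` and `reflection_iou` are all assumptions made for illustration. The sketch also assumes both eyes have already been cropped to equally sized grayscale patches.

```python
from typing import List

Patch = List[List[int]]  # grayscale eye crop, pixel values 0-255 (assumed input format)


def highlight_mask(patch: Patch, threshold: int = 200) -> List[List[bool]]:
    """Mark pixels bright enough to count as part of a reflection (assumed threshold)."""
    return [[value >= threshold for value in row] for row in patch]


def reflection_iou(left: Patch, right: Patch, threshold: int = 200) -> float:
    """Overlap (intersection over union) of the highlight shapes in two eye crops."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("eye crops must have the same dimensions")
    left_mask = highlight_mask(left, threshold)
    right_mask = highlight_mask(right, threshold)
    intersection = 0
    union = 0
    for row_l, row_r in zip(left_mask, right_mask):
        for l, r in zip(row_l, row_r):
            intersection += l and r  # pixel is bright in both eyes
            union += l or r          # pixel is bright in at least one eye
    # Two eyes with no highlight at all are treated as consistent.
    return intersection / union if union else 1.0


# Toy 3x3 crops: the same highlight in both eyes versus highlights in different places.
left_eye = [[0, 255, 255], [0, 255, 0], [0, 0, 0]]
matching_eye = [[0, 255, 255], [0, 255, 0], [0, 0, 0]]
mismatched_eye = [[0, 0, 0], [0, 0, 255], [0, 255, 255]]

print(reflection_iou(left_eye, matching_eye))    # 1.0
print(reflection_iou(left_eye, mismatched_eye))  # 0.0
```

A score near 1 means the highlights have nearly the same shape in both eyes, and a low score would flag the portrait for closer inspection. A real detector would also need to locate the eyes, align the two crops and compare colors, all of which this sketch leaves out.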

The analysis also works with videos, at least when they allow an unobstructed view of the eyes. Here, according to Lyu, it is particularly important to expose fakes. Politicians could be discredited with fake videos in which they appear to express extreme views.

“Unfortunately, numerous fake videos are created for pornographic purposes and this causes serious psychological damage to the victims,” says Lyu.

An earlier article already considered the fact that portraits created by AI and held in a freely accessible database are a huge source of fake profiles.


Sources: Pressetext.com, University at Buffalo, Press-News, TheNextWeb

Notes:

1) This content reflects the current state of affairs at the time of publication. The reproduction of individual images, screenshots, embeds or video sequences serves to discuss the topic.

2) Individual contributions were created through the use of machine assistance and were carefully checked by the Mimikama editorial team before publication.