Facebook, go home, you're drunk

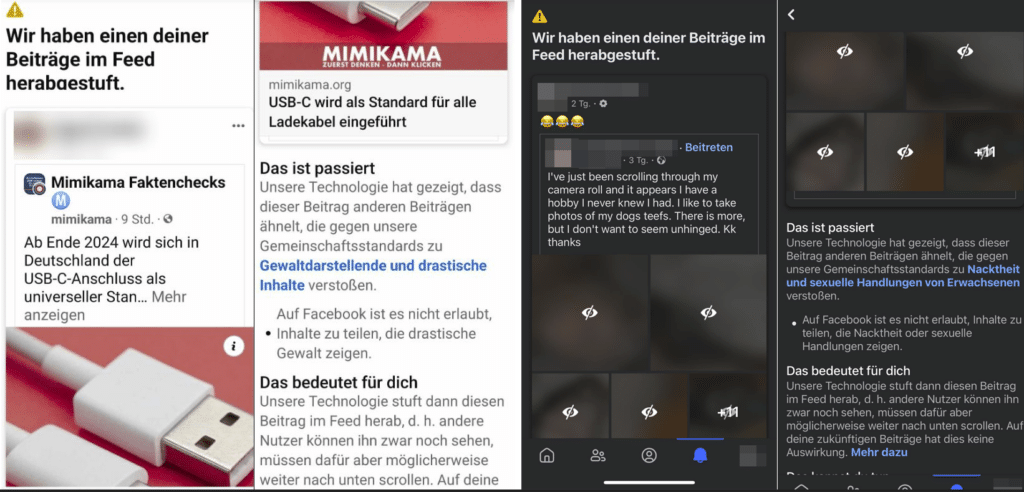

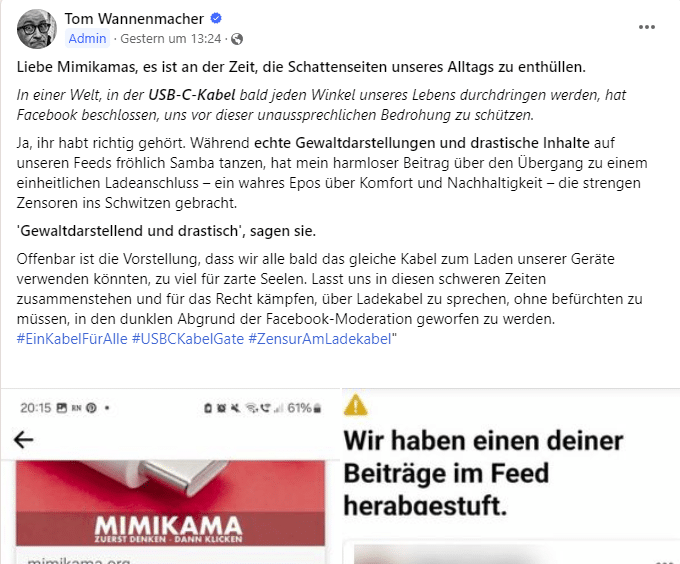

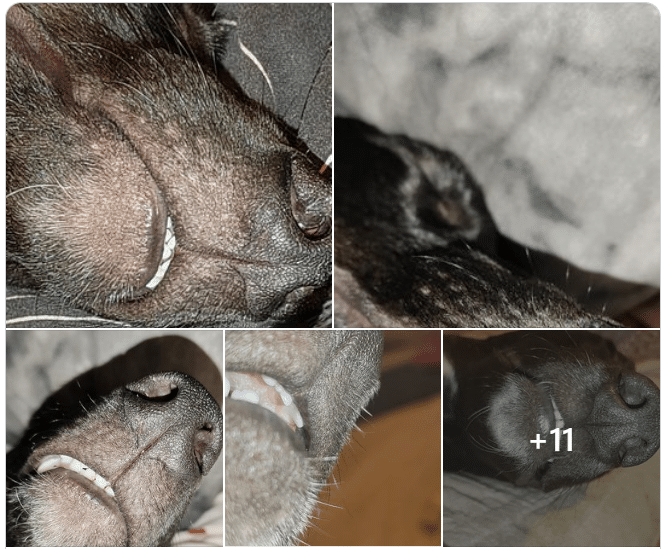

In recent days, Facebook has increasingly been notifying users that certain posts in their news feed have been demoted for allegedly violating its Community Standards. However, these automated decisions appear to involve significant errors of judgment. For example, harmless images of dog snouts were classified as nudity and sexual activity, while images of USB ports were flagged as violent and graphic content.

The first image is the header image of an article on the topic “USB-C will be introduced as the standard for all charging cables”. We wrote about Facebook's “interesting” decision in a post in our Facebook group.

The second post was about dog snouts.

Try as we might, we cannot explain which images or content could have taught Facebook's AI to recognize USB cables and dog snouts as violent or sexual, and we very much hope this is a temporary bug. Perhaps Facebook is currently adjusting its technology, and that is what is causing such bizarre errors?

Either way, these incidents raise urgent questions about the reliability and effectiveness of the algorithms involved. When users file an objection, the posts in question are reviewed again and, unsurprisingly, in most cases are no longer classified as problematic. Still, this process leaves a bitter aftertaste: how can a platform known for its advanced technology make such serious mistakes?

Limits of Facebook's artificial intelligence

These false positives reveal how limited artificial intelligence (AI) still is when it comes to correctly interpreting complex human content. While the intention to filter out inappropriate content is commendable, the apparent shortcomings of the algorithms cause frustration and confusion among users. The impression is that the technology is sometimes “overzealous”, resulting in unjustified restrictions.

The challenge of content moderation

The problem is not limited to unjustified restrictions, however. The platform is also criticized for often failing to identify and remove genuinely problematic content such as fakes, scams or explicit material. This apparent inconsistency in content moderation raises questions about Facebook's priorities and methods. How can blatant violations of the terms of service go unpunished while harmless content is wrongly sanctioned?

Technology or human error?

These incidents highlight the need for a more balanced approach to content moderation that combines technology with human judgment. Algorithms need to be continually improved and adapted to the complex nuances of human communication. At the same time, human moderators must serve as a safety net that compensates for the limitations of the technology.

Questions and answers about Facebook's technical problems:

Question 1: What are the main problems with Facebook's automated content moderation?

Answer 1: The main problems are algorithms that misjudge harmless content as violations and the failure to reliably identify and remove genuinely problematic content.

Question 2: How do these issues impact the user experience?

Answer 2: They lead to confusion, frustration and a loss of trust in the platform as users face unjustified restrictions and problematic content remains on the platform.

Question 3: What is the cause of these misjudgments?

Answer 3: The reason lies in the limited capabilities of artificial intelligence, which cannot always correctly interpret complex human content.

Question 4: What could Facebook do to solve these problems?

Answer 4: Facebook could continually improve its algorithms and ensure that human reviewers act as a safety net to compensate for the limitations of the technology.

Question 5: Why is a balanced approach to content moderation important?

Answer 5: A balanced approach is important to ensure that the platform remains a safe and welcoming place for all users, without unjustified restrictions or overlooking problematic content.

Conclusion

The recent content moderation mishaps on Facebook underscore the need for a revised strategy that both makes the technology more precise and incorporates human judgment. Such an approach could help restore user trust and keep the platform a safe space for sharing and interaction. We hope Facebook will use these incidents as an opportunity to rethink and improve its practices.



Notes:

1) This content reflects the state of affairs at the time of publication. Individual images, screenshots, embeds or video sequences are reproduced for the purpose of discussing the topic.
2) Individual contributions were created with machine assistance and were carefully checked by the Mimikama editorial team before publication.